A Study on Sentiment, Length, and Readability in Movie Reviews

This study analyzes how textual metrics, namely sentiment polarity, review length, and readability score, affect user engagement (measured as helpfulness votes) in Amazon movie and TV show reviews.

Background

Online consumer reviews are a cornerstone of modern decision-making, especially on platforms like Amazon and IMDb, where millions of reviews guide choices for movies and TV shows. These platforms often include helpfulness voting mechanisms, allowing users to indicate a review's usefulness. Despite their importance, the factors driving perceived helpfulness (such as review length, sentiment, and readability) remain underexplored. This study focuses on the movie and TV show domain to uncover how these elements influence engagement, building on prior research suggesting that emotional intensity, review depth, and clarity play key roles.

Understanding these dynamics is vital for optimizing review visibility, enhancing consumer decisions, and empowering content creators to write impactful reviews. It also contributes to broader insights into digital consumer behavior and natural language processing.

Research Question

Which factors influence the number of helpfulness votes (i.e., the perceived helpfulness) that movie and TV show reviews receive?

Hypotheses

- H1: The readability of a review has a curvilinear relationship with perceived helpfulness: reviews with moderate readability scores receive more votes, while very low or very high readability reduces vote counts.

- H2: The length of a review has a curvilinear relationship with perceived helpfulness: short reviews receive fewer votes, vote counts increase with length up to a threshold, and they then decrease for overly long reviews.

- H3: Reviews with strongly positive or negative sentiment receive higher vote counts than reviews with neutral sentiment.

These hypotheses are grounded in prior studies suggesting that extreme sentiments engage readers emotionally, while balanced length and readability optimize informativeness and accessibility.
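A curvilinear hypothesis such as H1 or H2 is commonly made testable by adding a squared term for the predictor to the count model. The specification below is an assumption for illustration, since the exact model terms are not reported here: $x_i$ is the readability score or length of review $i$, $\mu_i$ its expected vote count, and $\mathbf{z}_i$ the remaining covariates, under the negative binomial model's log link.

$$
\log \mu_i = \beta_0 + \beta_1 x_i + \beta_2 x_i^2 + \boldsymbol{\gamma}^{\top}\mathbf{z}_i,
\qquad
\frac{\partial \log \mu_i}{\partial x_i} = \beta_1 + 2\beta_2 x_i = 0
\;\Longrightarrow\;
x^{\ast} = -\frac{\beta_1}{2\beta_2}.
$$

The inverted-U shape proposed in H1 and H2 corresponds to $\beta_2 < 0$, with the peak $x^{\ast}$ falling inside the observed range of $x$.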

Dataset

The study uses the Amazon Review/Product Dataset from UCSD, spanning May 1996 to October 2018, with 233.1 million reviews across categories. This project focuses on the "Movies and TV Shows" subset, pre-processed to include 10,419 reviews after filtering out rows with missing votes and extreme lengths (under 10 or over 1024 words).

Methodology

This study employs a comprehensive pipeline: corpus creation and summarization; tokenization and data pre-processing; construction of a document-feature matrix (DFM) and a feature co-occurrence matrix (FCM); word clouds; readability scoring; review length calculation; sentiment analysis; topic modelling with Latent Dirichlet Allocation (LDA); and negative binomial regression to analyze review helpfulness.

Data Pre-processing

- Loaded 50,000 reviews and filtered them to 10,419 by removing reviews with missing votes or extreme lengths.

- Calculated review length and tokenized the text using R packages such as `quanteda` and `tidytext`.
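The filtering step can be sketched in Python as follows. It is not the study's R code: the field names `reviewText` and `vote`, the string format of `vote` (possibly with a thousands separator such as "1,234"), whitespace-based word counting, and inclusive 10/1024 bounds are all assumptions made for illustration.

```python
import re

# Length bounds from the Dataset section (treated as inclusive here, an assumption).
MIN_WORDS, MAX_WORDS = 10, 1024


def parse_votes(raw):
    """Return the vote count as an int, or None when the field is missing.

    Assumes votes arrive as strings that may contain thousands separators, e.g. "1,234".
    """
    if raw is None or str(raw).strip() == "":
        return None
    return int(str(raw).replace(",", ""))


def tokenize(text):
    """Lower-cased word tokens: a simple stand-in for quanteda/tidytext tokenizers."""
    return re.findall(r"[a-z']+", text.lower())


def preprocess(reviews):
    """Drop reviews with missing votes or out-of-range lengths; add length and tokens."""
    kept = []
    for review in reviews:
        votes = parse_votes(review.get("vote"))
        text = review.get("reviewText") or ""
        length = len(text.split())  # whitespace word count
        if votes is None or not MIN_WORDS <= length <= MAX_WORDS:
            continue
        kept.append({"text": text, "votes": votes, "length": length, "tokens": tokenize(text)})
    return kept


sample = [
    {"reviewText": "A great film, I loved it and would watch it again soon.", "vote": "1,234"},
    {"reviewText": "Too short.", "vote": "5"},
    {"reviewText": "No vote field on this review, although it is long enough to keep."},
]
print([(r["length"], r["votes"]) for r in preprocess(sample)])  # [(12, 1234)]
```

Only the first sample survives: the second is under 10 words and the third has no vote field.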

Readability, Length, and Sentiment Analysis

- Readability was assessed with Flesch-Kincaid scores using `textstat_readability`.

- Review lengths were grouped into bins of 50 words (1–50, 51–100, etc.), and the total helpfulness votes for each bin were calculated and plotted to analyze the trend of votes as review length increases.

- Sentiment polarity was computed using dictionary-based methods, including the NRC Word-Emotion Association Lexicon, with the `SentimentAnalysis` package, and the results were compared with those from `liwcalike`.
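The readability and length-binning steps can be sketched as below. The Flesch-Kincaid grade-level formula is standard, but the syllable counter is a crude vowel-group heuristic, so scores will not exactly match quanteda's `textstat_readability`, which supports several measures (e.g. "Flesch" and "Flesch.Kincaid"). The review records are assumed to carry `length` and `votes` fields from pre-processing.

```python
import re


def count_syllables(word):
    """Rough syllable count: vowel groups, minus a silent final 'e' (a heuristic)."""
    word = word.lower()
    n = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and n > 1:
        n -= 1
    return max(n, 1)


def flesch_kincaid_grade(text):
    """Flesch-Kincaid grade level: 0.39*(words/sentences) + 11.8*(syllables/words) - 15.59."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return None
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


def votes_by_length_bin(reviews, width=50):
    """Total votes per length bin, keyed by (first word, last word): (1, 50), (51, 100), ..."""
    totals = {}
    for r in reviews:
        start = (r["length"] - 1) // width * width + 1
        key = (start, start + width - 1)
        totals[key] = totals.get(key, 0) + r["votes"]
    return dict(sorted(totals.items()))


print(round(flesch_kincaid_grade("The cat sat on the mat."), 2))  # -1.45
print(votes_by_length_bin([
    {"length": 40, "votes": 2},
    {"length": 60, "votes": 5},
    {"length": 95, "votes": 1},
]))  # {(1, 50): 2, (51, 100): 6}
```

Very simple text can produce a negative grade level, as in the first example; the formula is not clamped.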
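A dictionary-based polarity score and the per-label mean votes shown in Figure 2 can be sketched as follows. The word lists are hypothetical placeholders, not the NRC lexicon, and both the formula (positive - negative) / (positive + negative) and the positive/negative/neutral cut-offs are assumptions, since the study's exact scoring rule is not given.

```python
from statistics import mean

# Hypothetical toy word lists for illustration only; the study used the NRC lexicon
# with the SentimentAnalysis package, which are far larger.
POSITIVE = {"great", "good", "love", "loved", "excellent", "fun"}
NEGATIVE = {"bad", "boring", "awful", "hate", "worst", "dull"}


def polarity(tokens):
    """(positive - negative) / (positive + negative) over matched tokens; 0.0 if none match."""
    pos = sum(t in POSITIVE for t in tokens)
    neg = sum(t in NEGATIVE for t in tokens)
    return 0.0 if pos + neg == 0 else (pos - neg) / (pos + neg)


def sentiment_label(score):
    """Assumed cut-offs: above 0 is positive, below 0 negative, exactly 0 neutral."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def mean_votes_by_sentiment(reviews):
    """Mean helpfulness votes per sentiment label, from tokenized reviews with vote counts."""
    groups = {}
    for r in reviews:
        groups.setdefault(sentiment_label(polarity(r["tokens"])), []).append(r["votes"])
    return {label: mean(votes) for label, votes in groups.items()}


print(mean_votes_by_sentiment([
    {"tokens": ["great", "film"], "votes": 10},
    {"tokens": ["awful"], "votes": 4},
    {"tokens": ["a", "film"], "votes": 1},
    {"tokens": ["loved", "it"], "votes": 6},
]))  # {'positive': 8, 'negative': 4, 'neutral': 1}
```

Reviews with no lexicon matches fall into the neutral group here; a real lexicon with wider coverage would leave fewer reviews at exactly zero.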

Statistical Modeling

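Vote counts are strongly overdispersed (mean: 7.97, variance: 513.12), whereas a Poisson model assumes that the variance equals the mean. A negative binomial regression model, which allows the variance to exceed the mean, was therefore used to evaluate the effects of length, polarity, and readability on votes.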
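The overdispersion check can be illustrated with the summary figures above. This Python sketch assumes the common NB2 parameterization, Var = mu + alpha * mu^2, and estimates alpha by the method of moments from the marginal mean and variance. That ignores covariates, so it is only a rough indication and not the fitted model's dispersion parameter (R's `MASS::glm.nb`, for instance, reports theta = 1/alpha).

```python
from statistics import mean, variance


def dispersion_summary(mu, var):
    """Variance-to-mean ratio and a method-of-moments NB2 dispersion estimate.

    Poisson assumes Var = mu; NB2 allows Var = mu + alpha * mu**2,
    so alpha = (Var - mu) / mu**2 (covariates ignored, so only a rough guide).
    """
    return var / mu, (var - mu) / mu ** 2


def dispersion_from_counts(votes):
    """Same summary computed from raw vote counts (sample variance)."""
    return dispersion_summary(mean(votes), variance(votes))


ratio, alpha = dispersion_summary(7.97, 513.12)  # mean and variance reported above
print(f"variance/mean = {ratio:.1f}, alpha = {alpha:.2f}")  # variance/mean = 64.4, alpha = 7.95
```

A variance roughly 64 times the mean is far from the Poisson ratio of 1, which is what motivates the negative binomial model.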

Graphs and Plots

Below are visualizations illustrating key insights from the analysis.

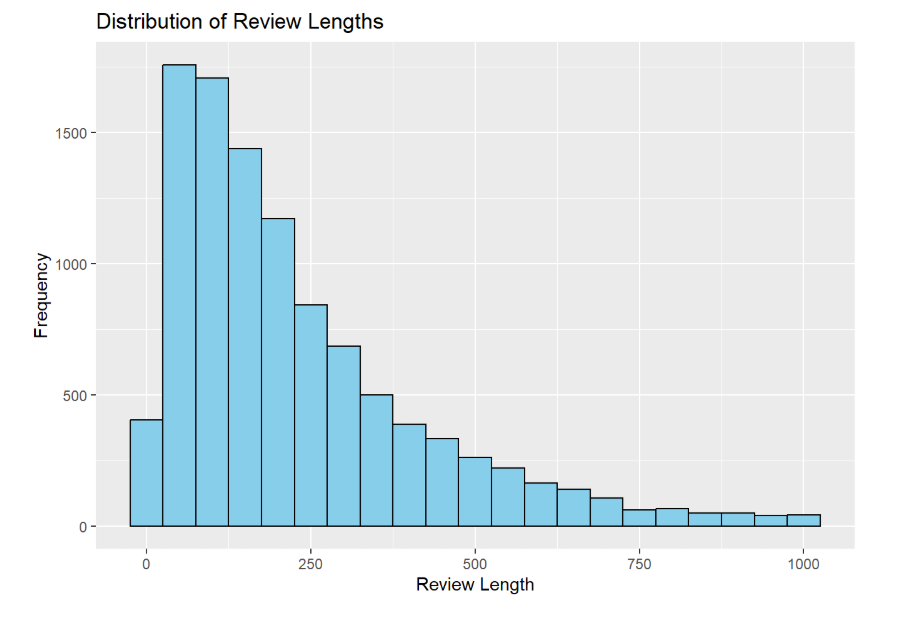

Figure 1: The distribution of review lengths shows that most reviews fall between 88 and 310 words, with a median of 171 words, which provides context for the optimal length range proposed in H2.

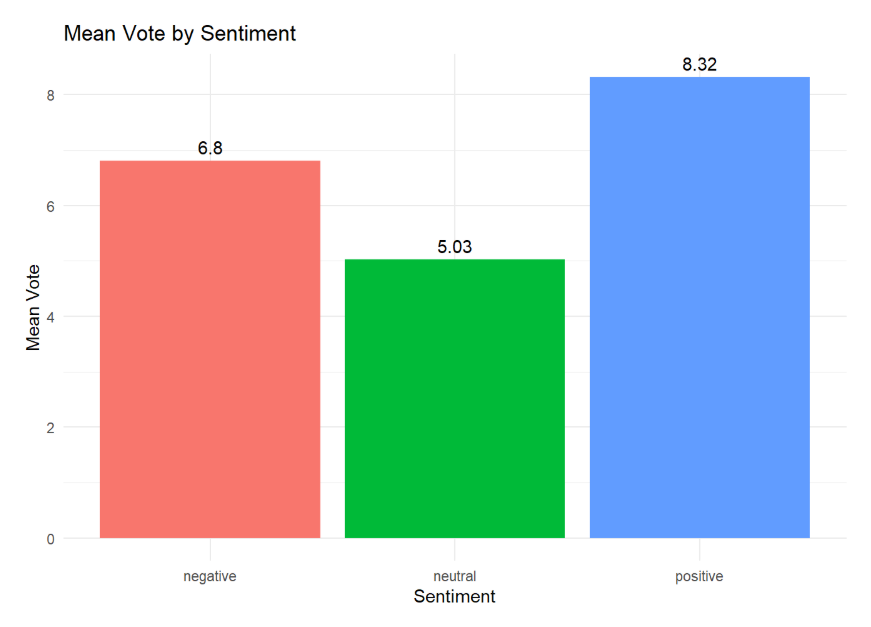

Figure 2: Mean votes by sentiment. Positive (8.32) and negative (6.8) reviews receive more votes on average than neutral reviews (5.03), in line with H3.

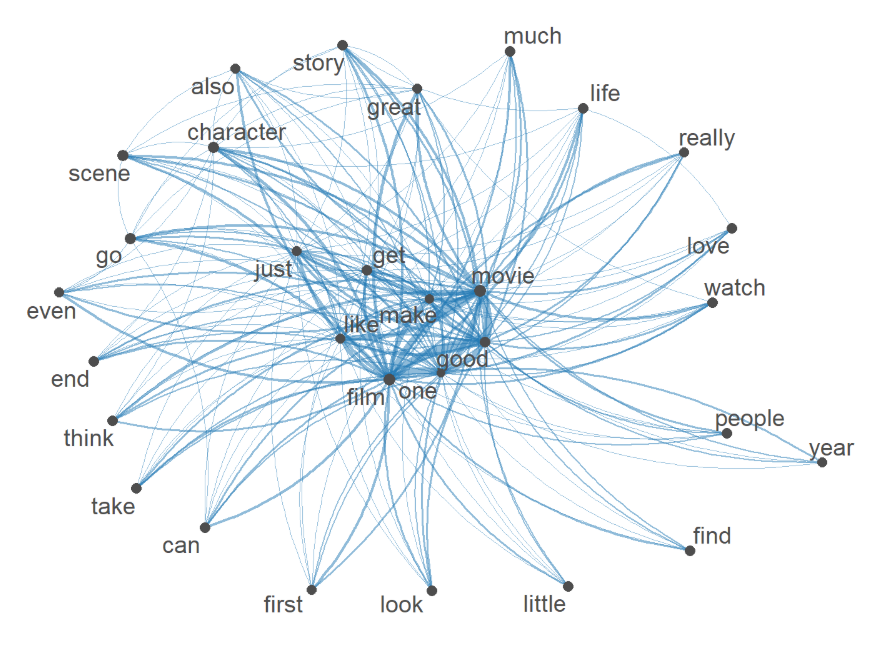

Figure 3: The document-feature matrix (DFM) and feature co-occurrence matrix (FCM) illustrate word frequencies and relationships. Common terms such as "film," "movie," and "good" dominate review content, while words such as "first," "look," and "little" have fewer connections.
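The DFM and FCM in Figure 3 can be approximated in plain Python. This is a simplified stand-in for quanteda's `dfm()` and `fcm()`, not their implementation: the DFM is stored as one `Counter` per document, the FCM counts unordered pairs of distinct terms within a hypothetical 5-token window, and a term's "connections" are taken to be its number of distinct co-occurring partners.

```python
from collections import Counter


def build_dfm(docs):
    """Document-feature matrix stored sparsely: one Counter of term counts per document."""
    return [Counter(tokens) for tokens in docs]


def build_fcm(docs, window=5):
    """Count unordered pairs of distinct terms occurring within `window` tokens of each other."""
    fcm = Counter()
    for tokens in docs:
        for i, a in enumerate(tokens):
            for b in tokens[i + 1 : i + 1 + window]:
                if a != b:
                    fcm[tuple(sorted((a, b)))] += 1
    return fcm


def connections(fcm):
    """Number of distinct co-occurring partners per term (its degree in the FCM network)."""
    degree = Counter()
    for a, b in fcm:
        degree[a] += 1
        degree[b] += 1
    return degree


docs = [["good", "movie", "good", "film"], ["bad", "movie"]]
totals = sum(build_dfm(docs), Counter())  # corpus-wide term frequencies
print(totals)
print(connections(build_fcm(docs, window=1)))
```

Terms with high counts in `totals` correspond to the frequent words in a word cloud, while terms with a low degree in `connections` are the sparsely linked nodes of a co-occurrence network.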

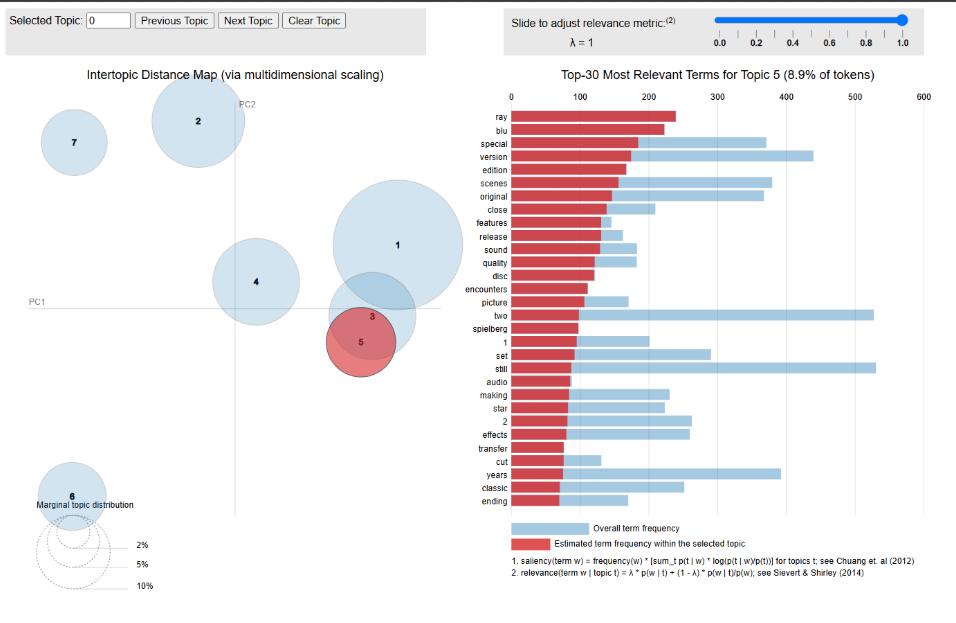

Figure 4: Topic Modelling with LDA reveals seven distinct topics in reviews (e.g., action films, horror, family stories), showing thematic diversity that may influence perceived helpfulness.
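The study's R implementation and LDA settings are not given. As an illustration only, the following is a from-scratch collapsed Gibbs sampler, one standard way to fit LDA, with hypothetical hyperparameters (alpha = 0.1, beta = 0.01) and the number of topics left as a parameter (the study reports seven).

```python
import random


def lda_gibbs(docs, n_topics, n_iter=200, alpha=0.1, beta=0.01, top_n=5, seed=0):
    """Fit LDA by collapsed Gibbs sampling and return the top_n words of each topic."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    index = {w: i for i, w in enumerate(vocab)}
    V = len(vocab)
    topics = range(n_topics)
    n_dk = [[0] * n_topics for _ in docs]  # tokens per (document, topic)
    n_kw = [[0] * V for _ in topics]       # tokens per (topic, word)
    n_k = [0] * n_topics                   # tokens per topic
    z = []                                 # current topic of every token

    def update(d, k, v, step):
        n_dk[d][k] += step
        n_kw[k][v] += step
        n_k[k] += step

    # Random initial assignment of each token to a topic.
    for d, doc in enumerate(docs):
        z.append([rng.randrange(n_topics) for _ in doc])
        for w, k in zip(doc, z[d]):
            update(d, k, index[w], +1)

    for _ in range(n_iter):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v = index[w]
                update(d, z[d][i], v, -1)  # remove the token's current assignment
                # Full conditional p(z = t | all other assignments), up to a constant.
                weights = [
                    (n_dk[d][t] + alpha) * (n_kw[t][v] + beta) / (n_k[t] + V * beta)
                    for t in topics
                ]
                z[d][i] = rng.choices(topics, weights=weights)[0]
                update(d, z[d][i], v, +1)

    return [sorted(vocab, key=lambda w: -n_kw[k][index[w]])[:top_n] for k in topics]


reviews = [
    ["ghost", "scream", "dark"] * 3,
    ["family", "love", "home"] * 3,
    ["ghost", "dark", "night"] * 3,
    ["family", "home", "kids"] * 3,
]
for k, words in enumerate(lda_gibbs(reviews, n_topics=2, top_n=3)):
    print(k, words)
```

On this toy corpus the sampler should tend to separate horror-like and family-like words, but the exact topics depend on the seed and iteration count.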

Results and Findings

- Readability: coefficient 0.0062 (p < 0.001), suggesting that higher readability scores are associated with more votes, with evidence of a curvilinear peak.

- Review length: coefficient 0.0022 (p < 0.001), indicating a significant positive effect on votes, although curvilinear trends suggest an optimal range.

- Polarity: coefficient 0.0027 (p = 0.385), showing no significant linear effect. A single linear polarity term cannot capture a pattern in which both strongly positive and strongly negative reviews outperform neutral ones, and the bar plots show that pattern: positive (8.32) and negative (6.8) reviews receive more votes on average than neutral ones (5.03), supporting H3.
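Negative binomial regression normally uses a log link, so each coefficient is a change in log expected votes, and exp(beta * delta) gives the multiplicative change in expected votes for a delta-unit increase with other variables held fixed. The sketch below assumes a log link (as in, for example, R's `MASS::glm.nb`) and that the coefficients are per word and per readability point, which the results above do not state; the step sizes of 100 words and 10 points are illustrative choices.

```python
import math


def rate_ratio(coef, delta):
    """Multiplicative change in expected votes for a `delta`-unit increase (log link)."""
    return math.exp(coef * delta)


# Coefficients reported above; the step sizes are illustrative choices.
for name, coef, delta in [
    ("length (+100 words)", 0.0022, 100),
    ("readability (+10 points)", 0.0062, 10),
]:
    print(f"{name}: x{rate_ratio(coef, delta):.3f} expected votes")
# length (+100 words): x1.246 expected votes
# readability (+10 points): x1.064 expected votes
```

Under these assumptions, 100 extra words correspond to roughly 25% more expected votes.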

These findings partially validate H1 and H2 (curvilinear trends were observed) and fully validate H3 (reviews with extreme sentiment receive more votes than neutral ones).

Conclusion

This study reveals that review length and readability significantly influence helpfulness votes, with optimal ranges enhancing engagement, while extreme sentiments (positive or negative) consistently outperform neutral ones. These insights can guide reviewers and platforms in crafting and prioritizing impactful content.

References

1. Dashtipour, K., et al. (2021). Sentiment analysis of Persian movie reviews using deep learning. Entropy, 23(5), 596.

2. Qaisar, S. M. (2020). Sentiment analysis of IMDb movie reviews using LSTM. ICCIS, 1-4.

3. Kumar, S., et al. (2020). Movie recommendation system using sentiment analysis. IEEE Transactions on Computational Social Systems, 7(4), 915-923.

4. Daeli, N. O. F., & Adiwijaya, A. (2020). Sentiment analysis on movie reviews using Information Gain and KNN. Journal of Data Science and Its Applications, 3(1), 1-7.